AI infrastructure for the enterprise

Traditionally, organizations have selected a single computing provider on which to base their operations. This may have been one of the top four cloud providers or an on-premises computing cluster. Once committed, the company would build its entire infrastructure using that specific computing provider's services. At that time, the provider offering the most cost-effective hardware, extensive services, or quickest implementation would secure the contract. However, in today’s landscape, cloud computing has become a standardized service, and organizations now have more compelling reasons than ever to explore developing products across multiple cloud platforms.

Handling mergers & acquisitions

In 2018, Microsoft acquired GitHub. On the day of the acquisition, Microsoft reiterated its intention to keep the platform open. To this day, GitHub’s existing infrastructure continues to rely heavily on AWS.

This is far from a unique situation: enterprises frequently acquire other companies, and the rate of acquisitions is steadily increasing. Mergers pose significant challenges for IT departments, several of which multi-cloud solutions can address:

- Mergers often lead to a diverse IT infrastructure, making multi-cloud solutions advantageous for managing various systems and applications from different entities.

- Multi-cloud offers flexibility for enterprises undergoing mergers to integrate and manage disparate cloud environments effectively.

- The necessity of multi-cloud arises from the intricacies involved in merging different technological ecosystems within enterprises, demanding a versatile cloud strategy.

Enabling pricing negotiation

Organizations often use multiple cloud providers strategically to keep leverage in pricing negotiations. For example, in 2011, Apple publicly stated it was running iCloud on both AWS and Azure. Experts speculate that part of the reason, besides reliability and high availability, was to have leverage for price negotiation with both providers. Apple has since parted ways with Azure and implemented a dual-cloud strategy with AWS and GCP.

Avoiding vendor lock-in

Standardization in cloud services is hard, and similar services often differ in ways that range from subtle to considerable, such as AWS EC2 versus GCP Compute and AWS IAM versus GCP Service Accounts. These differences compel customers to create custom solutions that are not readily transferable. Without proper oversight, organizations may become tied to a single cloud provider, making it significantly more challenging to migrate or offer services on alternative clouds. This becomes particularly crucial when companies need to reduce their cloud bills, as Snap, Twitter, and others learned.

Scaling out on-premises clusters

Many enterprises require dedicated data centers because of heavy regulation in their industry (e.g., pharma, finance), security compliance needs (e.g., defense organizations), or economic viability. Typically, these enterprises also implement solutions that span on-premises clusters and cloud providers for scale-out or R&D.

Accessing GPUs

With the advent of LLMs in late 2021, a race to acquire GPUs ensued. Enterprises raced to fine-tune and serve memory-hungry large models. Multi-cloud emerged as a way for enterprises to gain access to GPUs in a supply-starved market. In 2021, Nvidia spoke of supply chain constraints that were forcing enterprises to find innovative ways to work around the GPU shortage.

The promise (and pitfalls) of K8s

In 2021, a survey conducted by US cloud computing firm Nutanix revealed that 83% of the 1,700 IT decision-makers polled agreed that a hybrid multi-cloud approach was ideal. This continues to hold true as of January 2023, with over 60% of IT departments already running multi-cloud applications.

K8s emerged as the solution for workload portability due to its standard API and extensive set of abstractions. These make it possible to run services, batch workloads, and stateful applications on any cloud. Cloud providers swiftly implemented and offered managed K8s distributions on their platforms (EKS on AWS, GKE on GCP, AKS on Azure, OKE on OCI, and others).

Nevertheless, adopting K8s presented various challenges.

Infrastructure management

End users (particularly data scientists and ML engineers) are exposed to internal infrastructure concepts as consumers of K8s. This leaky abstraction means users can no longer focus solely on business logic (e.g., how to train a model) and are instead forced to learn about K8s YAML, deployments, kubectl, and more.

LinkedIn has one of the strongest developer cultures. After recognizing these limitations in existing tools, LinkedIn moved its ML pipelines to Flyte and contributed an interactive VS Code experience that gives users access to infrastructure for iteration and debugging using the same code, requirements, and configurations they use for production.

Not everything is on K8s

In an ideal scenario where users only have to learn one tool for all tasks, incorporating K8s into their skill set might have been feasible. However, not all services that users require can be operated on K8s. Databricks, for instance, has its own APIs for managing clusters and notebooks. Similarly, Snowflake requires users to learn how to submit queries, monitor them, and consume their results.

User separation

While K8s supports multi-tenancy, the actual implementation of multi-tenancy is an exercise left to the user. In many situations, this leads to wrong or incomplete implementations, leaked user data, and resource contention or starvation.

Federated K8s is still a WIP

Each K8s cluster lives in a single cloud. K8s doesn’t natively offer tools or constructs to build out a multi-cloud setup. Despite being worked on for years, Federated K8s has yet to provide the seamless single-pane-of-glass experience users are looking for.

While K8s paved the way to unlocking the power of multi-cloud, it still leaves a lot to be desired.

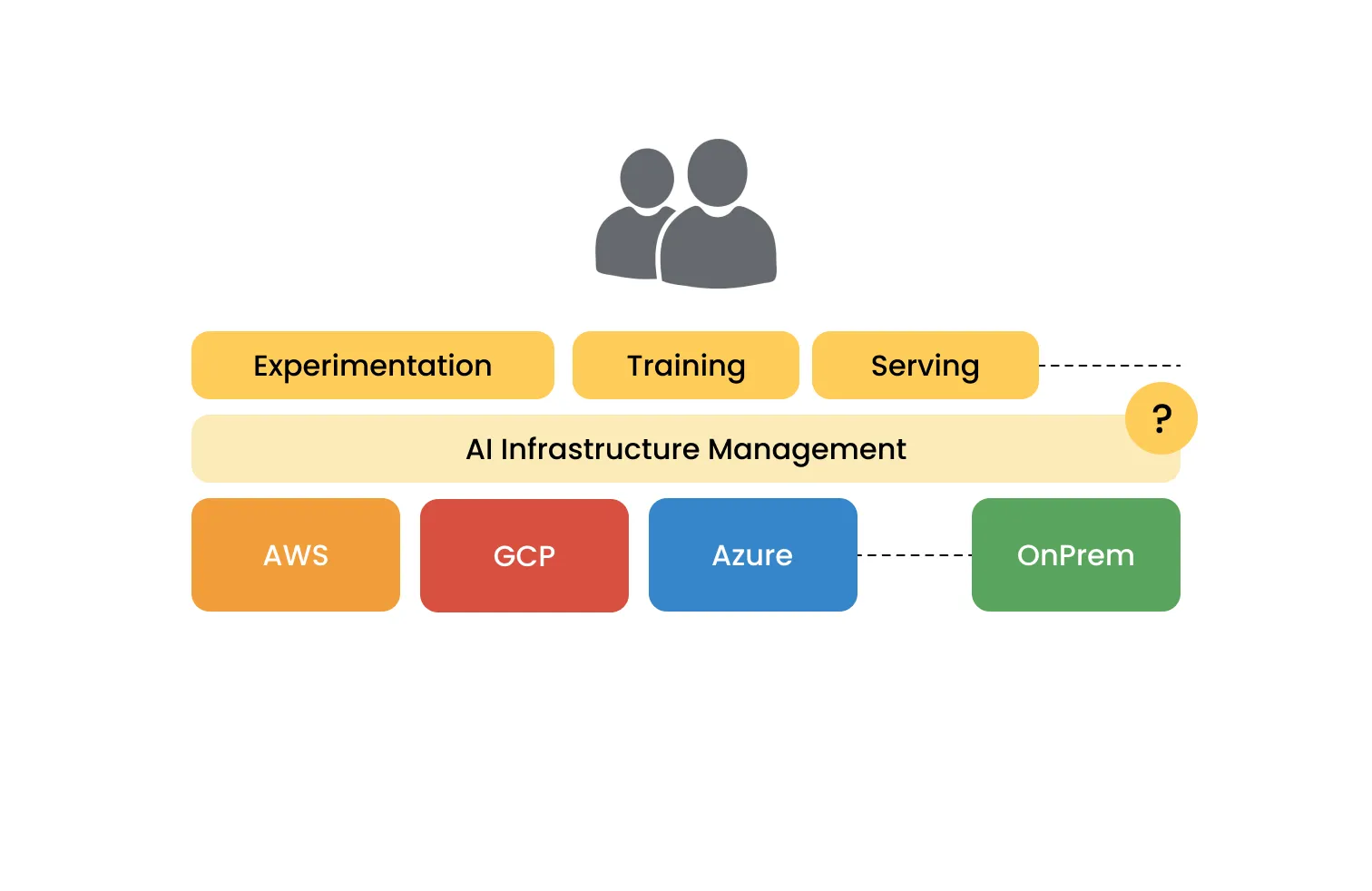

The solution

One insight we at Union had while working with many enterprises within the Flyte community is that many organizations would benefit from using Flyte as an interface that abstracts the cloud and K8s details while running within their own compute infrastructure. Talking with them further, we saw that many had hybrid, multi-cloud setups.

The split control plane / data plane architecture of Union allows organizations to connect data planes in different clouds to a single Union tenant. This makes it possible to unify different cloud providers behind a single endpoint. Users simply send workloads to one consistent API endpoint, and workloads move to the ideal service provider based on pre-configured routing rules. For example, Union supports per-project routing to a cloud account.
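To make per-project routing concrete, here is a minimal sketch in plain Python. It is not Union's API or configuration format: `DataPlane`, `ProjectRouter`, and the project and account names are all hypothetical, chosen only to show the idea of one endpoint resolving each project to a cloud account.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class DataPlane:
    """Hypothetical data plane: one cluster in one cloud account."""
    name: str
    provider: str  # e.g. "aws", "gcp", "on-prem"
    account: str


@dataclass
class ProjectRouter:
    """Hypothetical router: maps a project to a data plane, with a fallback."""
    default: DataPlane
    rules: Dict[str, DataPlane] = field(default_factory=dict)

    def route(self, project: str) -> DataPlane:
        # Projects without an explicit rule land on the default data plane.
        return self.rules.get(project, self.default)


aws_plane = DataPlane("aws-us-east", "aws", "acct-111")
gcp_plane = DataPlane("gcp-gpu", "gcp", "ml-research")

router = ProjectRouter(default=aws_plane, rules={"llm-finetuning": gcp_plane})

print(router.route("llm-finetuning").name)  # gcp-gpu
print(router.route("billing-etl").name)     # aws-us-east
```

The caller only ever names a project; which cloud runs the work is a lookup the platform owns, so rules can change without touching user code.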

Users can interact with Union as their primary interface, facilitating collaboration across teams and organizations. They can effortlessly share knowledge, code, and artifacts without needing to migrate to a specific cloud provider, as the return on investment for such a move is generally insufficient to justify the effort.

While working with users and at Lyft, we had already found various limitations with K8s for stateful data applications. Limits on the rate at which pods can be created, combined with the scale and burstiness of AI workloads, negatively affect K8s performance. Flyte was designed from the ground up with these issues in mind, and the core engine implements many techniques to ensure that these applications can continue to scale. For example, Flyte Agents is a framework that avoids launching pods for integrations with external services. Flyte also ships with multi-cluster support out of the box.
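The agent idea can be sketched roughly as follows. This is an illustration of the general pattern only, not Flyte's actual agent interface: `FakeWarehouse`, `QueryAgent`, and `run_to_completion` are hypothetical names, and the external service is simulated in memory. The point is that one long-running process submits and polls many remote jobs, so no pod is launched per task.

```python
import itertools
from typing import Dict, List


class FakeWarehouse:
    """Hypothetical stand-in for an external service such as a hosted query engine."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self._polls_left: Dict[str, int] = {}

    def submit(self, query: str) -> str:
        job_id = f"job-{next(self._ids)}"
        self._polls_left[job_id] = 2  # pretend each job needs two polls to finish
        return job_id

    def status(self, job_id: str) -> str:
        if self._polls_left[job_id] > 0:
            self._polls_left[job_id] -= 1
            return "RUNNING"
        return "SUCCEEDED"


class QueryAgent:
    """Hypothetical agent: a single process that tracks many remote jobs."""

    def __init__(self, service: FakeWarehouse) -> None:
        self.service = service

    def create(self, query: str) -> str:
        return self.service.submit(query)

    def get(self, job_id: str) -> str:
        return self.service.status(job_id)


def run_to_completion(agent: QueryAgent, queries: List[str]) -> Dict[str, str]:
    """Submit every query, then poll until each remote job reports a final state."""
    pending = {agent.create(q) for q in queries}
    done: Dict[str, str] = {}
    while pending:
        for job_id in sorted(pending):
            state = agent.get(job_id)
            if state != "RUNNING":
                done[job_id] = state
        pending.difference_update(done)
    return done


results = run_to_completion(QueryAgent(FakeWarehouse()), ["SELECT 1", "SELECT 2"])
print(results)
```

Because the work happens in the external service, the only cost on the cluster side is a few lightweight API calls per job, which sidesteps pod creation limits for this class of task.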

With Union, we wanted to make it easy to add multiple clusters and have specific routing rules that would allow development and critical production workloads to move to different K8s clusters to avoid second-order scale effects.

Thus, Union allows a much more flexible multi-cluster scale-out model (figure 2). It allows for cluster upgrades and improvements with zero downtime and almost no human intervention. This is necessary at Union's scale, as we run hundreds of K8s clusters for our customers. This architecture also sets Union up for future innovations such as priority scheduling and policy- and load-based routing, and lets users unify their various compute resources under a single platform.
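One way to picture zero-downtime upgrades in a multi-cluster setup is to stop sending new work to a cluster while it is being upgraded. The sketch below assumes exactly that and nothing more; it is not a description of how Union implements upgrades. `Cluster`, `ClusterPool`, and the least-loaded selection rule are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Cluster:
    name: str
    running: int = 0        # workloads currently assigned
    draining: bool = False  # True while the cluster is being upgraded


class ClusterPool:
    """Hypothetical pool that skips draining clusters and picks the least loaded."""

    def __init__(self, clusters: List[Cluster]) -> None:
        self.clusters: Dict[str, Cluster] = {c.name: c for c in clusters}

    def drain(self, name: str) -> None:
        self.clusters[name].draining = True

    def restore(self, name: str) -> None:
        self.clusters[name].draining = False

    def assign(self) -> str:
        candidates = [c for c in self.clusters.values() if not c.draining]
        if not candidates:
            raise RuntimeError("no cluster is accepting workloads")
        # Least-loaded first; ties are broken by name for determinism.
        target = min(candidates, key=lambda c: (c.running, c.name))
        target.running += 1
        return target.name


pool = ClusterPool([Cluster("cluster-a"), Cluster("cluster-b")])

pool.drain("cluster-a")                        # upgrade of cluster-a begins
during_upgrade = [pool.assign() for _ in range(3)]

pool.restore("cluster-a")                      # upgrade finished
after_upgrade = pool.assign()

print(during_upgrade, after_upgrade)
```

Users keep submitting to the same endpoint throughout; only the set of eligible clusters changes behind it.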

Conclusion

Traditionally, enterprises have relied on a single compute provider for their infrastructure, but in today's world, there are more reasons than ever to consider building products on multiple clouds. Union provides a single pane of glass that lets customers abstract infrastructure in an increasingly multi-cloud, multi-cluster world, offering standardization, efficiency, correctness, and reliability. Learn more about how Union can help you deploy, run, and scale any AI workload at Union.ai.