Flyte is a workflow orchestrator that unifies machine learning, data engineering, and data analytics stacks for building robust and reliable applications.

Today, we are thrilled to announce the official launch of Flyte Agents, a new extensibility framework that simplifies integrating any hosted service (second-party or third-party) into Flyte workflows.

Background

AI workflows often require custom infrastructure that evolves rapidly across use cases. For example, new LLM-based workflows may perform data extraction on CPUs but run inference using OpenAI APIs. Time series forecasting workflows need access to data warehouses such as Snowflake and run data transformations with Spark on Kubernetes, whereas computer vision models require GPUs for training.

Flyte was built to handle these diverse use cases. Since 2016, it has evolved to separate concerns between users and infrastructure teams:

- Users declare required resources like data, models, and compute without worrying about specific backend services like Databricks or AWS. They focus on the capabilities needed rather than vendors.

- Infrastructure teams manage services and map user declarations onto them as plugins. For example, Spark workloads could run on Kubernetes, Databricks, EMR, or GCP Dataproc.

This separation avoids tight coupling between code and infrastructure versions. Platform teams can update backends without changing user repositories, an advantage highlighted in this video by Spotify’s engineering team, which explains similar data orchestration problems.
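The separation described above can be pictured as a lookup that the platform team owns. The following is a minimal sketch with entirely hypothetical names (`TaskDeclaration`, `BACKENDS`, `resolve_backend`); it is not Flyte's actual plugin API, only an illustration of why a backend migration need not touch user code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskDeclaration:
    """What a user asks for: a capability, not a vendor (hypothetical type)."""
    name: str
    task_type: str  # e.g. "spark" or "sql"


# Owned by the platform team; users never import or edit this mapping.
BACKENDS = {
    "spark": "spark-on-kubernetes",
    "sql": "snowflake",
}


def resolve_backend(task: TaskDeclaration, backends: dict) -> str:
    """Map a user's declared task type onto whichever service is configured."""
    if task.task_type not in backends:
        raise ValueError(f"no backend configured for task type {task.task_type!r}")
    return backends[task.task_type]


job = TaskDeclaration(name="daily_aggregation", task_type="spark")
before = resolve_backend(job, BACKENDS)

# The platform team migrates Spark to Databricks; the user's declaration is unchanged.
migrated = {**BACKENDS, "spark": "databricks"}
after = resolve_backend(job, migrated)
```

The same `job` object resolves to a different service before and after the migration, which is the property the user-facing side relies on.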

Flyte's architecture reflects this separation of concerns:

Flyte’s existing Golang plugin architecture has several key advantages for handling varied use cases:

- Performance and Cost: Avoids spinning up extra containers just to launch and monitor remote jobs

- Lifecycle Management: Centralized plugins enforce quality and correctness better than user-written plugins can. A built-in ability to abort jobs keeps runaway costs from unchecked Spark/SQL queries under control

- Flexibility and Portability: Migrate between service providers smoothly (e.g. Qubole to in-house Spark)

However, there are also some challenges:

- Limited Contributions: Golang backend hinders contributions from Python-first data teams

- Testing Overhead: Plugins need full clusters for integration testing

- Local Development Constraints: Plugins only work on remote clusters, hampering local testing

- Ecosystem Incompatibility: Cannot leverage Python community plugins, such as those written for Airflow

Introducing Flyte Agents

Flyte Agents are a new system for extensibility: they act as intermediaries between Flyte and external systems and facilitate task execution. Over the past few months, we have worked with the Flyte community to iterate on and refine the Flyte Agent framework and are ready to make it generally available.

Flyte Agents are long-running, stateless services that receive execution requests via gRPC and initiate jobs with the appropriate services. This eliminates the need for individual sensor pods or other external communication, saving significant resources. Agents scale up and down with demand. To ensure recoverability, agents send task state and metadata back to Flyte Propeller, which persists the information to the database. This ensures that workflow state is safely stored and that workflows can resume after agent failures or scale-downs.
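To make the recoverability argument concrete, here is a minimal sketch in plain Python. All names (`ExternalService`, `StatelessAgent`, the `database` dict) are hypothetical stand-ins rather than Flyte components, and gRPC is left out entirely. The point it illustrates is that the agent holds no per-job state: the caller persists the metadata, so a replacement agent instance can pick up where the old one left off.

```python
import json


class ExternalService:
    """Stand-in for a hosted platform that owns the real job state."""

    def __init__(self):
        self._jobs = {}

    def submit(self, query: str) -> str:
        job_id = f"job-{len(self._jobs) + 1}"
        self._jobs[job_id] = {"query": query, "polls": 0}
        return job_id

    def status(self, job_id: str) -> str:
        job = self._jobs[job_id]
        job["polls"] += 1
        # Pretend the job finishes on the second status check.
        return "SUCCEEDED" if job["polls"] >= 2 else "RUNNING"


class StatelessAgent:
    """Keeps no per-job fields; everything it needs arrives with each call."""

    def __init__(self, service: ExternalService):
        self._service = service

    def create(self, query: str) -> str:
        # Return serialized metadata for the caller to persist.
        return json.dumps({"job_id": self._service.submit(query)})

    def get(self, resource_meta: str) -> str:
        return self._service.status(json.loads(resource_meta)["job_id"])


service = ExternalService()
database = {}  # stand-in for the store the orchestrator persists to

agent = StatelessAgent(service)
database["task-1"] = agent.create("SELECT 1")
first_phase = agent.get(database["task-1"])

# Simulate an agent crash or scale-down: the old instance is gone.
del agent
replacement = StatelessAgent(service)
second_phase = replacement.get(database["task-1"])
```

Because the metadata lives in `database` and not in the agent, discarding the first instance loses nothing.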

Agents solve a number of the challenges with the existing Flyte plugin architecture. One of the biggest advantages is local execution. Since Agents can be spawned in process, all services can run locally as long as the connection secrets are available. Moreover, because Agents use a protobuf interface, they can be implemented in any language, which leaves plenty of room for reusing existing libraries and makes testing simpler.

Agents connect Flyte to any external or internal service in a standardized way. They launch jobs on hosted platforms like Databricks or Snowflake and call synchronous services for tasks like access control, data retrieval, and model inference.

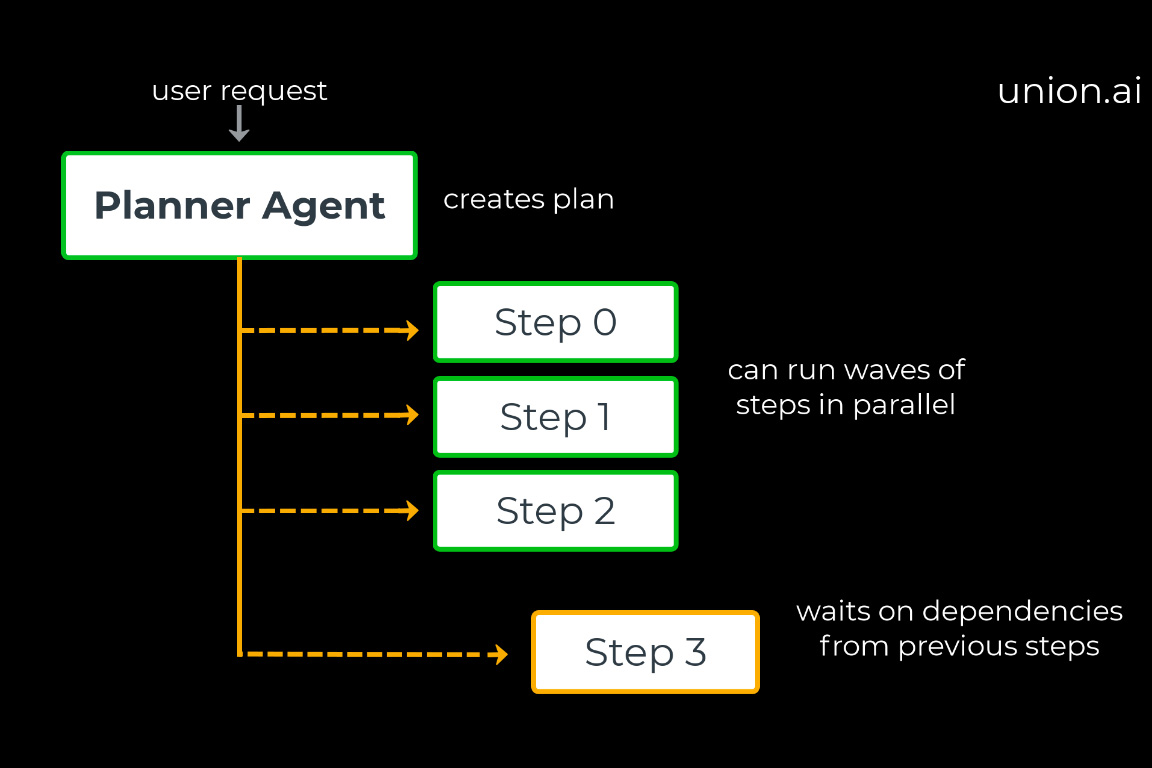

Specifically, agents enable two key workflows:

- Async agents enable long-running remote jobs that execute on an external platform over time (e.g. Spark workloads on Databricks).

- Sync agents enable request/response services that return immediate outputs (e.g. calling an internal API to fetch data).

Developing Flyte Agents

Flyte provides a Python toolkit that simplifies developing an agent. Rather than building a complete gRPC service from scratch, an agent can be implemented as a simple Python class.

Depending on the agent type, the interface needs only a single method (do) for a sync agent, or at most three methods (create, get, and delete) for an async agent.

Example async agent that can launch and monitor a long-running job, like a query on Snowflake:
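A minimal, self-contained sketch of that shape follows. It uses plain Python classes rather than the flytekit base classes, and a hypothetical in-memory `FakeWarehouse` in place of Snowflake, so every name here is an assumption made for illustration. What it shows is the division of labor among the three methods: `create` submits and returns metadata, `get` polls using only that metadata, and `delete` aborts.

```python
import itertools
from dataclasses import dataclass


class FakeWarehouse:
    """Hypothetical in-memory stand-in for a warehouse such as Snowflake."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.queries = {}

    def submit(self, sql: str) -> str:
        query_id = f"q{next(self._ids)}"
        self.queries[query_id] = {"sql": sql, "checks": 0, "cancelled": False}
        return query_id

    def poll(self, query_id: str) -> str:
        query = self.queries[query_id]
        if query["cancelled"]:
            return "CANCELLED"
        query["checks"] += 1
        # Pretend every query finishes on the third status check.
        return "SUCCEEDED" if query["checks"] >= 3 else "RUNNING"

    def cancel(self, query_id: str) -> None:
        self.queries[query_id]["cancelled"] = True


@dataclass(frozen=True)
class QueryMeta:
    """Everything needed to find the remote job again later."""
    query_id: str


class WarehouseAsyncAgent:
    def __init__(self, warehouse: FakeWarehouse):
        self._warehouse = warehouse

    def create(self, sql: str) -> QueryMeta:
        # Launch the job and hand back metadata; do not wait for completion.
        return QueryMeta(query_id=self._warehouse.submit(sql))

    def get(self, meta: QueryMeta) -> str:
        # Report the current phase of a previously created job.
        return self._warehouse.poll(meta.query_id)

    def delete(self, meta: QueryMeta) -> None:
        # Abort the job, e.g. when the workflow is cancelled.
        self._warehouse.cancel(meta.query_id)


def run_to_completion(agent, sql, max_polls=10):
    """Drive the agent the way an orchestrator might: create, then poll."""
    meta = agent.create(sql)
    phases = []
    for _ in range(max_polls):
        phases.append(agent.get(meta))
        if phases[-1] != "RUNNING":
            break
    return phases


agent = WarehouseAsyncAgent(FakeWarehouse())
phases = run_to_completion(agent, "SELECT COUNT(*) FROM orders")

# Aborting a second job before it finishes.
meta = agent.create("SELECT * FROM very_large_table")
agent.delete(meta)
aborted_phase = agent.get(meta)
```

A real agent would poll on a schedule and map the vendor's statuses onto Flyte's task phases; both are omitted here to keep the sketch short.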

Example sync agent that returns a response immediately, like creating a SagemakerModel:
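A minimal sketch of the sync shape, again in plain Python rather than the flytekit base classes. `FakeModelRegistry`, `ModelRequest`, and `ModelResponse` are hypothetical stand-ins for a hosted model service and its payloads, not SageMaker's API. The single `do` method takes a request and returns the result in the same call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRequest:
    model_name: str
    image_uri: str


@dataclass(frozen=True)
class ModelResponse:
    model_id: str  # illustrative identifier, not a real ARN


class FakeModelRegistry:
    """Hypothetical in-memory stand-in for a hosted model service."""

    def __init__(self):
        self.models = {}

    def create_model(self, name: str, image_uri: str) -> str:
        model_id = f"model/{name}"
        self.models[model_id] = image_uri
        return model_id


class CreateModelSyncAgent:
    def __init__(self, registry: FakeModelRegistry):
        self._registry = registry

    def do(self, request: ModelRequest) -> ModelResponse:
        # One request, one response: there is no job to poll or abort.
        model_id = self._registry.create_model(request.model_name, request.image_uri)
        return ModelResponse(model_id=model_id)


registry = FakeModelRegistry()
agent = CreateModelSyncAgent(registry)
response = agent.do(ModelRequest(model_name="churn", image_uri="registry.example/churn:1"))
```

Because the agent is an ordinary object, it can be called in process as above, which is what makes local testing straightforward.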

Agents simplify interacting with both vendor-hosted software and internal company systems. Their standardized interfaces and low overhead streamline cross-system orchestration.

Next steps

Looking ahead, we will cover specific agent development patterns that empower users to author their own lightweight integrations. For those eager to get started, our documentation provides an in-depth guide, and you can check out our growing list of available agents here.

Flyte Agents enable unified orchestration across the motley collection of services that constitute modern technology stacks. Whether calling a SaaS application or connecting internal databases, they eliminate the need for one-off integrations. With centralized logic and reusable tooling, they constitute the fabric that weaves Flyte into an organization's entire data and ML stack. We're excited to see all the integrations the community creates with this new capability!