Union.ai CEO and co-founder Ketan Umare recently joined other machine learning experts at “Machine Learning in Production,” a Twitter event hosted by Kubernetes expert Kelsey Hightower.

The group included Flyte creators and maintainers, industry experts, and practitioners from companies such as Stripe, HBO Max, Blackshark.ai, and Merantix. They tackled a variety of data and machine learning topics, including what a machine learning model is, how to iterate on one, the importance of reproducibility and scalability, and how to account for ethics within these models.

Kelsey set up the conversation: “This whole world of machine learning is pretty new to me in general. I've always had questions around, ‘What does it look like in production, end to end? How do we go from a bunch of data to these really cool APIs, things like language translation or things that can convert video or search video?’ I know a lot of people think machine learning is important, but how does it work in production?”

The case for ML models

Here’s how Ketan and the other speakers approached the topic.

“What sets machine learning apart from traditional software is that ML products must adapt rapidly in response to new demands,” Ketan said.

“A phenomenon that I often see is that machine learning products deteriorate over time, while software products improve with time. If you have the best model today, it's not guaranteed to be the best model tomorrow.” For example, Ketan said, the COVID-19 pandemic caused massive disruption to existing models of customer behavior, forcing companies to change them rapidly.

“We wanted to build a system that would help people handle this kind of complexity, this different modality of operations, and that's what led to developing Flyte.

“Union.ai’s mission is to make the organization of machine learning so easy and repeatable that teams of any size can use it to create products and services. We want to help companies scale their use of ML. And as more and more companies rely on machine learning and are creating models, it’s becoming more important to use a solid foundation and understand the nuances of modeling.”

Ketan first put ML into practice at scale at Lyft, where he was tasked with figuring out how to show users their estimated time of arrival within the app. But with all the variables at play (traffic, driving habits, road conditions, and different cities and terrains), his machine learning model had to be updated constantly.

Arno Hollosi, CTO at Blackshark.ai, described another journey to ML models driven by the need for constant updates. His team helped create the three-dimensional world in Microsoft Flight Simulator, which combines satellite imagery from Bing Maps with an ML model that detected 1.5 billion building footprints. The team used satellite and aerial imagery to extract information about buildings, infrastructure, land use, and other features that constantly change and need to be updated.

Both examples show the need for ML models that can be updated continually. Why? Because your model is your foundation: it is what you replicate, test, and tweak. These are the key things to consider when building ML models that go into production.

Setting the foundation

First things first: Your model needs a foundation — and one that is reproducible.

Ketan described how to set yourself up: “First, determine an objective and have a fast iteration system. Then, make sure that things are abstracted and you have the ability to deliver to production. It’s also key that the people involved are working together and there is no loss of accountability — for example, if person A develops a model and person B deploys it to production, and then B feels frustrated when things break.”

Finally, once you’re in production, make sure you’re monitoring against your objective. It’s also important to repeat this process continually and in parallel, because different parts of the system may behave differently, Ketan said.

Ketan offered the following example from Lyft: New York City and San Francisco are both US cities, but Lyft needed a different model for each because their variables differ. Ideally, a single, identical pipeline should be able to handle these variations among models.
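A minimal Python sketch of that idea, assuming a toy ETA model: one shared pipeline function runs unchanged for every city, and only the data (and therefore the fitted model) differs. The `EtaModel` and `run_pipeline` names, the (distance, duration) trip format, and the minutes-per-kilometer "model" are hypothetical illustrations, not Lyft's actual approach.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class EtaModel:
    """Hypothetical toy ETA model: one learned rate per city."""
    city: str
    minutes_per_km: float

    def predict(self, distance_km: float) -> float:
        return self.minutes_per_km * distance_km


def run_pipeline(city: str, trips: list[tuple[float, float]]) -> EtaModel:
    """Shared pipeline: validate the city's trips, fit, and return its model.

    `trips` is a hypothetical list of (distance_km, duration_min) pairs.
    """
    # Validation step: drop rows that cannot be used for fitting.
    clean = [(d, t) for d, t in trips if d > 0 and t > 0]
    if not clean:
        raise ValueError(f"no usable trips for {city}")
    # Training step: the toy model is the average minutes per kilometer.
    return EtaModel(city, mean(t / d for d, t in clean))


# Same pipeline code, different data per city, different resulting models.
trips_by_city = {
    "New York City": [(2.0, 14.0), (5.0, 33.0), (0.0, 3.0)],
    "San Francisco": [(2.0, 10.0), (5.0, 26.0)],
}
models = {city: run_pipeline(city, trips) for city, trips in trips_by_city.items()}
for city, model in models.items():
    print(f"{city}: {model.predict(3.0):.1f} min for 3 km")
```

The design choice worth noticing is that the city is an input to the pipeline, not a fork of it: adding a new city means adding data, not copying code.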

Setting a solid foundation requires you to understand your objective and learn to work as a team so the model can be reproduced and ultimately scaled.

Reproducing your model

As Ketan said, models deteriorate and need to be updated, which makes reproducibility key.

Fabio M. Grätz, who leads MLOps at Merantix Momentum, agreed. “I transitioned from the machine learning world into the ML ops world because we realized that value comes only when machine learning models are actually put into production,” he said. “Like Ketan said, machine learning models deteriorate over time and decay in performance. So we realized that the most important thing is the ability to be able to update them quickly.”

Quickly updating these models is what makes reproducibility so important — and why it was discussed at length.

The worst-case scenario is not being able to reproduce your model exactly as it was created. In a real-world example, Ketan shared how losing one team member caused a delay because, without that person, they couldn’t figure out how to reproduce the original model precisely.

Ketan said that infrastructure abstraction is the key to unlocking the capacity of a team. He suggested first assembling a team to determine the objective metric that you want to optimize.

“Start with the smallest thing first, build an end-to-end pipe that says, ‘Okay, I can try different ideas quickly. I can back-test my hypothesis against older data sets. I can compare it to the real world and what I'm observing.’ Then the next part of the pipeline is, ‘Now that I have set this up, I’m able to iterate.’ Now let's make sure that when you deploy to production, you can still monitor and make sure things are going well,” he said.

Training the model

Once you have a foundation, it’s important to be able to test and reproduce it, the panelists said. That means training the foundational model you’ve built: it needs to be trained, tested, and reconfigured until you reach a final result that you can deploy.

According to Arno, training a model is more like developing source code. “You write your code to define the model. Once it's checked in, you need to feed it with training data. So you're launching machines and starting the training loop. The model is reading the training data and adjusting its internal weights.

“And the next step is doing a unit test. You then have a validation set and the test set of data to check if the accuracy is good enough. When the accuracy is good enough and it works on your validation test set, you’re able to deploy it to production.”

As Kelsey explained, the data used in training and testing is what makes the model better and more accurate. In this analogy, the artifact produced by the training phase becomes the final source code that goes into the delivery pipeline.

But Fabio reminded the group that there are many variables in a machine learning system: “You need to select versions of code changes and test them in the ML system. Of course, the training code that governs how your model is trained can change, but the configuration of the code can also change, and the data can change. So you need to have versioning for all of that.

“For example, you need to versionize the configuration for that code,” he said. “You also need to versionize data, and you'll need to test data changes as well.”
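To make Fabio's point concrete, here is a minimal Python sketch, assuming a run is defined by three inputs: training code, a configuration dictionary, and a data blob. It derives a single fingerprint from all three, so a change to any one of them yields a different version. The `run_fingerprint` helper is hypothetical; real systems typically take a version-control commit for code and a dataset version or snapshot ID for data rather than hashing everything in memory.

```python
import hashlib
import json


def run_fingerprint(code: str, config: dict, data: bytes) -> str:
    """Return one key that changes if the code, the config, or the data changes."""
    parts = (
        code.encode("utf-8"),
        # sort_keys makes the key independent of dict insertion order.
        json.dumps(config, sort_keys=True).encode("utf-8"),
        data,
    )
    outer = hashlib.sha256()
    for part in parts:
        # Hash each part on its own first, so bytes cannot "shift" across
        # the boundary between code, config, and data and collide.
        outer.update(hashlib.sha256(part).digest())
    return outer.hexdigest()


code = "def train(lr): ..."
data = b"feature,label\n0.1,0\n0.9,1\n"
base = run_fingerprint(code, {"lr": 0.01, "epochs": 5}, data)
reordered = run_fingerprint(code, {"epochs": 5, "lr": 0.01}, data)
new_config = run_fingerprint(code, {"lr": 0.02, "epochs": 5}, data)
new_data = run_fingerprint(code, {"lr": 0.01, "epochs": 5}, data + b"0.5,1\n")
print(base == reordered, base != new_config, base != new_data)
```

Reordering the configuration keys leaves the fingerprint unchanged, while changing a hyperparameter or appending a row of data produces a new one, which is the property reproducibility depends on.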

Fabio suggested building a pipeline that runs the tests, validates the input data, trains the model, evaluates it, and then verifies that the model is good enough to deploy.
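The sequence Fabio describes can be sketched as a small Python pipeline. This is a minimal illustration under stated assumptions: the data is a toy list of (feature, label) pairs, the "model" is a single learned threshold, and the 0.9 accuracy gate is an arbitrary example value. The function names are hypothetical, and the step that runs the code's own tests is left out for brevity. The point is the shape: each stage can stop the run, and only a model that passes evaluation leaves the pipeline.

```python
from typing import Sequence

Example = tuple[float, int]  # hypothetical (feature, label) pair, label 0 or 1


def validate_data(rows: Sequence[Example]) -> None:
    """Data-validation step: fail fast on empty or malformed input."""
    if not rows:
        raise ValueError("dataset is empty")
    if any(label not in (0, 1) for _, label in rows):
        raise ValueError("labels must be 0 or 1")


def train(rows: Sequence[Example]) -> float:
    """Training step: toy classifier that learns a single threshold
    halfway between the mean feature value of each class."""
    pos = [x for x, y in rows if y == 1]
    neg = [x for x, y in rows if y == 0]
    if not pos or not neg:
        raise ValueError("training data needs both classes")
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def evaluate(threshold: float, rows: Sequence[Example]) -> float:
    """Evaluation step: accuracy of the threshold on held-out rows."""
    correct = sum((x >= threshold) == (y == 1) for x, y in rows)
    return correct / len(rows)


def run_pipeline(train_rows, test_rows, min_accuracy: float = 0.9):
    """Validate -> train -> evaluate -> gate. Only a model that passes
    the gate is returned as a deployable artifact."""
    validate_data(train_rows)
    validate_data(test_rows)
    threshold = train(train_rows)
    accuracy = evaluate(threshold, test_rows)
    if accuracy < min_accuracy:
        raise RuntimeError(
            f"accuracy {accuracy:.2f} is below {min_accuracy}; not deploying"
        )
    return threshold, accuracy


train_rows = [(0.1, 0), (0.2, 0), (0.8, 1), (0.9, 1)]
test_rows = [(0.3, 0), (0.7, 1)]
threshold, accuracy = run_pipeline(train_rows, test_rows)
print(f"threshold={threshold:.2f} accuracy={accuracy:.2f}")
```

Because the gate lives inside the pipeline, the deliverable is the whole train-and-check process rather than a single model file, which matches Fabio's framing.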

“What you should consider as the deliverable of what you're building is not the model only, but the entire pipeline that trains and delivers the model which touches on the whole reproducibility of these ML models and the decisions that they're making.

“Even if you have the algorithm, even if you have the training code, it doesn't mean you can get the same level of accuracy again if you don't know how the code was configured.”

Iterating on the model

Training and iterating on the model go hand in hand. The group had recommendations for how best to iterate, including using snapshots of data so that experiments can be managed and reproduced.

“As you track and test changes, you need to do it not only in code but also in configuration and data,” Fabio said. “Let's just say I'm using petabytes of data to feed into this process. Am I taking a snapshot of that data so I can include it in the reproducibility?”

Ketan suggested that engineers keep the data snapshot constant throughout a given phase of training. If you compare runs that used different datasets, you can't draw conclusions about what caused the difference in outcomes.
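A minimal Python sketch of that discipline, assuming each training run records the ID of the data snapshot it used. The `RunResult` and `accuracy_gain` names and the snapshot IDs are hypothetical. The comparison simply refuses to report a difference between runs trained on different snapshots.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    run_id: str
    snapshot_id: str  # hypothetical ID of the data snapshot used in training
    accuracy: float


def accuracy_gain(baseline: RunResult, candidate: RunResult) -> float:
    """Compare two runs only if they were trained on the same data snapshot.

    If the snapshots differ, a change in accuracy could come from the data
    rather than from the change under test, so no conclusion is drawn.
    """
    if baseline.snapshot_id != candidate.snapshot_id:
        raise ValueError(
            f"{baseline.run_id} used {baseline.snapshot_id} but "
            f"{candidate.run_id} used {candidate.snapshot_id}; not comparable"
        )
    return candidate.accuracy - baseline.accuracy


a = RunResult("run-1", "snapshot-A", 0.82)
b = RunResult("run-2", "snapshot-A", 0.85)
print(f"gain: {accuracy_gain(a, b):+.2f}")
```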

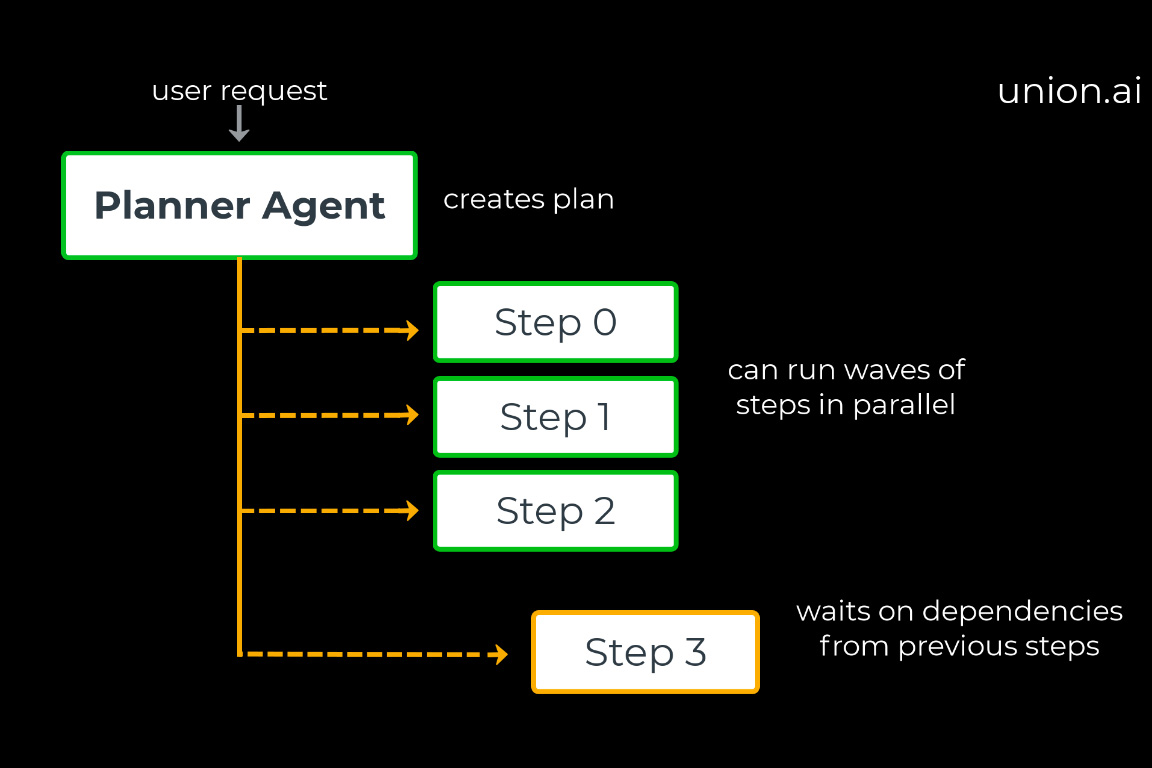

“The workflow primitive within Flyte makes sure that each of the steps produces an outcome that can be repeated, and yet it produces the same outcome that's encapsulated within the system.”

But Jordan Ganoff, an engineer at Stripe, warned that taking snapshots of petabytes of data is expensive. Instead, he recommended separating metadata from the data itself, which allows you to treat a version of a dataset as if it were code.
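One way to apply Jordan's suggestion, sketched in Python under the assumption that a dataset is a directory of files: instead of copying the data, record a small manifest of metadata (each file's path and content hash) and derive a version ID from it. The manifest is tiny and can be committed and reviewed like code, while the data stays where it is. The helper names are hypothetical. Hashing still reads every byte once, so at petabyte scale a storage system's own version IDs or checksums would be a more practical source of this metadata.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Metadata only: map each file's relative path to its content hash."""
    return {
        p.relative_to(root).as_posix(): file_sha256(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def dataset_version(manifest: dict[str, str]) -> str:
    """A short, stable ID for this exact set of files."""
    canonical = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def save_manifest(manifest: dict[str, str], out: Path) -> None:
    """Write the manifest as small JSON that can be committed next to code."""
    out.write_text(json.dumps(manifest, indent=2, sort_keys=True) + "\n")
```

A training run can then record `dataset_version(...)` alongside its code commit, so "which data was this?" has the same kind of answer as "which code was this?"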

Other experts had suggestions as well, including Evan Sadler, a data scientist at HBO Max. “This is actually one of the reasons I really like using Flyte. You can map a cell in a notebook to its own task, and they're really easy to compose and reuse and copy and paste around. Jupyter notebooks are great for iterating, but moving more towards a standard software engineering workflow and making that easy enough for data scientists is really, really important.”

Fabio added, “The great thing about Flyte is that you can write your workload in Python code, and data scientists and engineers are typically very comfortable writing Python code. So, you can turn their Jupyter notebooks into Python modules and then say, ‘Hey, I want to run this in the cloud now.’”

As you iterate, test, and tweak, remember what Fabio said: What you should consider as the deliverable of what you're building is the entire pipeline that trains and delivers the model.

Creating a scalable model

Once you’ve built your model, trained it, and iterated on it, you want to be able to use it at scale. But to do that, you have to account for the new variables the model will encounter and still be able to reproduce your models accurately.

Arno offered another example from his team's work on Microsoft Flight Simulator. “Houses, trees, and landscapes look different in different parts of the world. The Earth is diverse, so a model that works in North America may not work in Asia,” he said.

He recommended using a wide variety of samples and monitoring your false positives when you start to scale. When you build a model on specific data points and then apply it to a new pool of data, you don’t know what the model is going to pick up on. “You don't know if the model was using the color. Was it using the texture? Was it using the size?” Arno said.
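Monitoring false positives per region can be sketched in a few lines of Python. This assumes you have a labeled sample from each region containing the model's prediction and the ground truth; the region names, record format, and 0.1 review threshold below are hypothetical. Breaking the rate out by region surfaces the kind of failure Arno describes, where a model that looks fine overall misbehaves in a part of the world it wasn't trained on.

```python
from collections import defaultdict
from typing import Iterable


def false_positive_rate_by_region(
    records: Iterable[tuple[str, bool, bool]],
) -> dict[str, float]:
    """records: (region, predicted_building, actually_building) per sample.

    False-positive rate = false positives / all actual negatives, per region.
    Regions with no actual negatives in the sample are omitted.
    """
    false_pos: dict[str, int] = defaultdict(int)
    negatives: dict[str, int] = defaultdict(int)
    for region, predicted, actual in records:
        if not actual:
            negatives[region] += 1
            if predicted:
                false_pos[region] += 1
    return {region: false_pos[region] / n for region, n in negatives.items()}


def regions_to_review(rates: dict[str, float], max_rate: float = 0.1) -> list[str]:
    """Flag regions whose false-positive rate exceeds a chosen threshold."""
    return sorted(r for r, rate in rates.items() if rate > max_rate)


labeled = [
    ("north_america", False, False), ("north_america", False, False),
    ("north_america", True, True), ("north_america", False, False),
    ("asia", True, False), ("asia", False, False), ("asia", True, True),
]
rates = false_positive_rate_by_region(labeled)
print(rates, regions_to_review(rates))
```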

He went on to explain a problem his team encountered with water areas. “If it was windy, you saw waves, but for the model, it was more like noise and it randomly built trees, buildings, and roads inside this noise.”

Maybe a machine learning model is not enough, he continued. “Maybe you need additional information. We knew where the water is, so we just removed all of the false positives inside the water.”
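The fix Arno describes, combining model output with information you already have, can be sketched in Python. This assumes detections are pixel positions on the same grid as a boolean water mask taken from a separate map source; the names and the grid representation are hypothetical simplifications of what a real geospatial pipeline would use.

```python
Detection = tuple[int, int, str]  # hypothetical (row, col, label) on a raster grid


def drop_detections_in_water(
    detections: list[Detection], water_mask: list[list[bool]]
) -> list[Detection]:
    """Remove detections that fall on pixels already known to be water.

    The mask comes from an independent source, not from the model, so it
    can veto predictions the model should never make there. Detections
    outside the mask's extent are kept, since the mask says nothing about them.
    """
    kept = []
    for row, col, label in detections:
        in_bounds = 0 <= row < len(water_mask) and 0 <= col < len(water_mask[row])
        if in_bounds and water_mask[row][col]:
            continue  # a building, tree, or road in open water is a false positive
        kept.append((row, col, label))
    return kept


water = [
    [False, True],
    [False, True],
]
found = [(0, 0, "building"), (0, 1, "tree"), (1, 1, "road"), (1, 0, "road")]
print(drop_detections_in_water(found, water))
```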

Challenges to overcome

Machine learning models will inevitably include elements that are unexplained—you won’t always know what the model is picking up.

Gautam Kedia, who works on risk, machine learning, and engineering at Stripe, cautioned about another challenge: bias. “It’s a hard problem to solve but easy to talk about,” he said.

Machine learning models can sometimes become biased, and we don’t understand how they make their decisions, so there’s no way to track it, Gautam said.

“We've put in a lot of energy in the last year or so to identify certain sources of bias and mitigate them in our models,” he said. “It's never going to be perfect, but we have to keep working towards it.”

Want to listen to the full conversation? Find it here. We will talk more about Machine Learning in Production and related topics in an upcoming blog post series about Orchestration for Data and ML. If you have any questions or comments, please reach out to us at [email protected].

About Union.ai

Union.ai helps organizations deliver reliable, reproducible and cost-effective machine learning and data orchestration built around open-source Flyte. Flyte is a one-of-a-kind workflow automation platform that simplifies the journey of data scientists and machine learning engineers from ideation to production. Some of the top companies, including Lyft, Spotify, GoJek and more, rely on Flyte to power their Data & ML products. Based in Bellevue, Wash., Union.ai was started by founding engineers of Flyte and is the leading contributor to Flyte.